-

Why Self-Host Your Anki Sync?

Anki is the gold standard for spaced repetition learning — used by medical students, language learners, and lifelong learners worldwide. By default, Anki syncs through AnkiWeb, Anki’s official cloud service. But there are good reasons to run your own sync server: full ownership of your data, no upload limits, the ability to share a server with a study group, and the peace of mind that comes with keeping everything on your own hardware.

Anki Sync Server Enhanced wraps the official Anki sync binary in a production-ready Docker image with features you’d expect from a proper self-hosted service — and it’s now submitted to the TrueNAS Community App Catalog for one-click deployment.

What’s Included

🔐 User Management: Create sync accounts via environment variables. No database setup required.

🔒 Optional TLS: Built-in Caddy reverse proxy for automatic HTTPS with Let’s Encrypt or custom certs.

💾 Automated Backups: Scheduled backups with configurable retention and S3-compatible storage support.

📊 Metrics & Dashboard: Prometheus-compatible metrics endpoint and optional web dashboard for monitoring.

🐳 Docker Native: Lightweight Debian-based image. Runs as non-root. Healthcheck included.

⚡ TrueNAS Ready: Submitted to the Community App Catalog. Persistent storage, configurable ports, resource limits.
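The “configurable retention” in the backups feature commonly means keeping only the newest N archives. Below is a minimal Python sketch of that policy. The `*.tar.gz` pattern, the keep count, and both function names are assumptions for illustration, not the image’s `backup.sh` logic, which may retain by age instead. The selection step is kept pure so it can be tested without touching the filesystem:

```python
from pathlib import Path


def select_expired(backups: list[tuple[str, float]], keep: int) -> list[str]:
    """Given (filename, mtime) pairs, return the names to delete so that only
    the `keep` most recent backups remain."""
    if keep < 0:
        raise ValueError("keep must be non-negative")
    newest_first = sorted(backups, key=lambda item: item[1], reverse=True)
    return [name for name, _ in newest_first[keep:]]


def prune_backup_dir(backup_dir: Path, keep: int, pattern: str = "*.tar.gz") -> list[str]:
    """Apply select_expired() to real files in backup_dir and delete the expired ones."""
    files = [(p.name, p.stat().st_mtime) for p in backup_dir.glob(pattern)]
    expired = select_expired(files, keep)
    for name in expired:
        (backup_dir / name).unlink()
    return expired
```

For example, `prune_backup_dir(Path("/backups"), keep=7)` would leave the seven most recent archives in the backup volume and return the names it removed.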

How It Works

Anki Desktop / Mobile → Anki Sync Server Enhanced → TrueNAS Storage

Your Anki clients sync directly to your TrueNAS server over your local network or via Tailscale/WireGuard.

The server runs the official anki-sync-server Rust binary (the same code that powers AnkiWeb) inside a hardened container. Point your Anki desktop or mobile app at your server’s URL, and syncing works exactly like it does with AnkiWeb, just on your own infrastructure.

TrueNAS Installation

Once the app is accepted into the Community train, installation is straightforward from the TrueNAS UI. In the meantime, you can deploy it as a Custom App using the Docker image directly.

PR Status: The app has been submitted to the TrueNAS Community App Catalog via PR #4282 and is awaiting review. Track progress on the app request issue #4281.

To deploy as a Custom App right now, use these settings:

Connecting Your Anki Client

After the server is running, configure your Anki client to use it. In Anki Desktop, go to Tools → Preferences → Syncing and set the custom sync URL to your server address, for example http://your-truenas-ip:8080. On AnkiDroid, the setting is under Settings → Sync → Custom sync server. On AnkiMobile (iOS), look under Settings → Syncing → Custom Server.

Then simply sync as usual: your Anki client will talk to your self-hosted server instead of AnkiWeb.
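If a client refuses to sync, a useful first check is whether anything is listening on the port at all. This is the same idea as the TCP healthcheck described in the lessons further down: open a connection and ignore what the HTTP layer would answer for `/`. Here is a minimal Python sketch; the function name is my own and not part of the image:

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    This only proves that something accepts connections; unlike an HTTP check,
    it does not care which status code the server would return for "/".
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, DNS failures, and timeouts.
        return False
```

For example, `port_is_open("your-truenas-ip", 8080)` from a laptop on the same network. A `True` result only shows the port is reachable; credentials and the sync protocol itself are not exercised.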

Building It: Lessons from TrueNAS App Development

Packaging a Docker image as a TrueNAS app turned out to involve a few surprises worth sharing for anyone considering contributing to the catalog.

TrueNAS apps use a Jinja2 templating system backed by a Python rendering library, not raw docker-compose files. Your template calls methods like Render(values), c1.add_port(), and c1.healthcheck.set_test(), which generate a validated compose file at deploy time. This means you get built-in support for permissions init containers, resource limits, and security hardening for free.

One gotcha: TrueNAS runs containers as UID/GID 568 (the apps user), not root. If your entrypoint writes to files owned by a different user, it will fail silently or crash. We hit this with a start_time.txt write and had to make it non-fatal. Another: the Anki sync server returns a 404 on / (it has no landing page), so the default curl --fail healthcheck marks the container as unhealthy. Switching to a TCP healthcheck solved it cleanly.

The TrueNAS CI tooling is solid: a single ci.py script renders your template, validates the compose output, spins up containers, and checks health status. If the healthcheck fails, it dumps full container logs and inspect data, making debugging fast.

Get Involved

Ready to Self-Host Your Anki Sync?

Deploy it on TrueNAS today or star the project on GitHub to follow development.

Anki TrueNAS Self-Hosted Docker Spaced Repetition Open Source Homelab

-

AI • Model Release

Claude Opus 4.6: Anthropic’s Most Capable Model Yet

Published February 6, 2026

On February 5, 2026, Anthropic released Claude Opus 4.6 — its most powerful AI model to date. Arriving just three months after Opus 4.5, this update delivers a massive expansion to the context window, a new “agent teams” paradigm for parallel task execution, and benchmark scores that surpass both OpenAI’s GPT-5.2 and Google’s Gemini 3 Pro across a range of evaluations.

Whether you’re a developer building agentic workflows, a knowledge worker producing professional documents, or a researcher wrestling with enormous datasets, Opus 4.6 marks a tangible leap in what an AI model can handle in a single session.

- 1M token context window (beta)
- 128K max output tokens
- 68.8% ARC AGI 2 score
- $5 / $25 input / output per 1M tokens

What’s New in Opus 4.6

While the version bump from 4.5 to 4.6 may seem incremental, the changes under the hood are substantial. Anthropic has focused on three pillars: reasoning depth, context capacity, and agentic execution.

⚡ 1 Million Token Context Window

A 5× increase over the previous 200K limit. Opus 4.6 scores 76% on the MRCR v2 needle-in-a-haystack benchmark at 1M tokens, compared to just 18.5% for Sonnet 4.5, a qualitative shift in usable context.

🤖 Agent Teams in Claude Code

Multiple specialised agents can now split a task and work in parallel (one on the frontend, one on the API, one on a migration), coordinating autonomously. Anthropic reports a roughly 30% reduction in end-to-end task runtime.

🧠 Adaptive Thinking

Replaces the binary on/off extended thinking toggle. Opus 4.6 dynamically decides how much reasoning effort a prompt requires. Four effort levels (low, medium, high, max) give developers fine-grained cost–speed–quality control.

♾️ Context Compaction API

A new beta feature that automatically summarises older context as conversations grow, enabling effectively infinite sessions without manual truncation or sliding-window hacks.

📊 Claude in PowerPoint & Excel Updates

Claude now operates as a side panel inside PowerPoint, respecting your slide masters and layouts. Excel gets unstructured data support and longer workflows for paid subscribers.

Benchmark Breakdown

Opus 4.6 sets new state-of-the-art scores on several major evaluations. The most striking result is on ARC AGI 2, a benchmark designed to measure novel problem-solving that is easy for humans but notoriously hard for AI. Opus 4.6 scored 68.8% — nearly double Opus 4.5’s 37.6% and well ahead of GPT-5.2 (54.2%) and Gemini 3 Pro (45.1%).

| Benchmark | Opus 4.6 | Opus 4.5 | GPT-5.2 | Gemini 3 Pro |
|---|---|---|---|---|
| Terminal Bench 2.0 | 65.4% | 59.8% | n/a | n/a |
| OSWorld (Agentic) | 72.7% | 66.3% | < 72.7% | < 72.7% |
| ARC AGI 2 | 68.8% | 37.6% | 54.2% | 45.1% |
| MRCR v2 (1M ctx) | 76% | n/a | n/a | n/a |
| Humanity’s Last Exam | #1 | n/a | n/a | n/a |

Beyond the headline numbers, Opus 4.6 also tops the GDPval-AA benchmark for economically valuable knowledge work, outperforming GPT-5.2 by approximately 144 Elo points. In life sciences, it delivers nearly twice the performance of its predecessor on computational biology, structural biology, organic chemistry, and phylogenetics tests.
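For intuition about the 144-point GDPval-AA gap, here is the standard Elo expected-score formula, assuming the benchmark uses the conventional 400-point logistic scale (I have not verified its exact rating method):

$$
E = \frac{1}{1 + 10^{-\Delta/400}}, \qquad
\Delta = 144 \;\Rightarrow\; E = \frac{1}{1 + 10^{-0.36}} \approx \frac{1}{1 + 0.437} \approx 0.70
$$

Under that assumption, a gap of this size corresponds to Opus 4.6 being preferred in roughly 70% of head-to-head comparisons.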

Coding and Developer Impact

Coding has always been a strength of the Opus line, and 4.6 takes it further. The model plans more carefully before generating code, catches its own mistakes through improved self-review, and sustains agentic tasks for longer without losing coherence. For large codebases, Anthropic claims it can now handle autonomous code review, debugging, and refactoring across repositories that would have previously required human intervention.

“Opus 4.6 is a model that makes the shift from chatbot to genuine work partner really concrete for our users.” — Scott White, Head of Product, Anthropic

The new agent teams feature in Claude Code is particularly noteworthy. Rather than a single agent working sequentially, developers can now spin up parallel agents that own distinct parts of a task. Anthropic’s example: one agent handles the frontend, another the API layer, and a third manages database migrations — all coordinating autonomously. This is available as a research preview and represents a meaningful step towards multi-agent orchestration out of the box.

Enterprise and Knowledge Work

Anthropic has been explicit about targeting enterprise workflows with this release. Roughly 80% of the company’s business comes from enterprise customers, and Opus 4.6 is tuned for the kind of work they care about: financial analysis, legal research, document production, and multi-step research tasks.

The model now leads on the Finance Agent benchmark and TaxEval by Vals AI. Combined with the expanded context window, analysts can feed entire filings, market reports, and internal data into a single session and get coherent, cross-referenced outputs. Anthropic says Opus 4.6 produces documents, spreadsheets, and presentations that approach expert-created quality on the first pass, reducing the rework cycle significantly.

💡 Pricing Note: Standard API pricing remains at $5 input / $25 output per million tokens, identical to Opus 4.5. Requests exceeding 200K input tokens are charged at a premium rate of $10 / $37.50 per MTok. The model is available via the Anthropic API, AWS Bedrock, Google Vertex AI, Microsoft Foundry, and directly on claude.ai.

Availability and API Changes

Opus 4.6 is live now across all major platforms. The API model identifier is simply claude-opus-4-6; note the simplified naming without a date suffix. It’s available on the Anthropic API, AWS Bedrock, Google Vertex AI, Microsoft Foundry, and through GitHub Copilot for Pro, Pro+, Business, and Enterprise users.

Developers should be aware of a few API changes: assistant message prefilling now returns a 400 error (migrate to structured outputs or system prompt instructions), the output_format parameter has moved to output_config.format, and the effort parameter is now generally available without a beta header.

Safety and Alignment

Anthropic reports that the intelligence gains in Opus 4.6 have not come at the cost of safety. On their automated behavioural audit, the model showed low rates of misaligned behaviours including deception, sycophancy, and encouragement of user delusions — matching Opus 4.5’s results. Six new cybersecurity probes have been added to evaluate potential misuse vectors, and the model achieves a lower rate of unnecessary refusals compared to previous releases.

The Bigger Picture

Opus 4.6 arrives at a moment of intensifying competition. OpenAI announced its new OpenAI Frontier enterprise platform just hours before Anthropic’s launch, signalling a strategic pivot towards infrastructure and agent management rather than competing purely on benchmark scores. Google’s Gemini 3 Pro and Microsoft’s deep integration of Opus 4.6 into Foundry add further complexity to the landscape.

What sets this release apart is the combination of raw capability and practical utility. The 1M context window, agent teams, adaptive thinking, and context compaction aren’t just benchmark optimisations — they address real friction points that developers and knowledge workers hit daily. If Opus 4.5 moved Claude from “chatbot” to “useful tool,” Opus 4.6 positions it as a genuine work partner that can own entire workflows end-to-end.

For those already running Opus 4.5 in production, the upgrade path is a single API version change at the same price point. For everyone else, this is a strong argument to take a serious look at what Claude can do in 2026.
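For teams planning that switch, here is a minimal Python sketch of two checks built only from the figures and changes quoted in this article: a per-request cost estimate and a request-body migration. The helper names, the plain-dict request shape, and the assumption that the premium rate covers the entire request once input passes 200K tokens are mine; confirm both against Anthropic’s pricing and migration documentation before relying on them.

```python
import copy

# USD per million tokens, as quoted in the pricing note in this article.
STANDARD_RATES = (5.00, 25.00)       # (input, output)
LONG_CONTEXT_RATES = (10.00, 37.50)  # assumed to apply once input exceeds the threshold
LONG_CONTEXT_THRESHOLD = 200_000     # input tokens


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Rough USD cost of one request.

    Assumption: above the threshold the premium rate applies to the whole
    request, not only to the tokens beyond 200K.
    """
    if input_tokens > LONG_CONTEXT_THRESHOLD:
        rate_in, rate_out = LONG_CONTEXT_RATES
    else:
        rate_in, rate_out = STANDARD_RATES
    return (input_tokens * rate_in + output_tokens * rate_out) / 1_000_000


def migrate_request(body: dict) -> dict:
    """Return a copy of a Messages-style request body adapted to the changes listed above.

    Hypothetical helper; field names follow this article's description.
    """
    new = copy.deepcopy(body)  # leave the caller's dict untouched
    new["model"] = "claude-opus-4-6"
    if "output_format" in new:
        # output_format moves under output_config.format
        new.setdefault("output_config", {})["format"] = new.pop("output_format")
    messages = new.get("messages", [])
    if messages and messages[-1].get("role") == "assistant":
        # Assistant prefill now returns HTTP 400, so fail before sending.
        raise ValueError("assistant prefill is no longer supported; "
                         "use structured outputs or the system prompt")
    return new
```

For example, `estimate_cost(300_000, 20_000)` prices a 300K-token filing analysis at the premium rates, and `migrate_request(old_body)` surfaces a prefill as a local error instead of a 400 from the API.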

-

Two major releases of rfsrs are now available, bringing custom parameter support, SM-2 migration tools, and — the big one — parameter optimization. You can now train personalized FSRS parameters directly from your Anki review history using R.

Version 0.2.0: Custom Parameters & SM-2 Migration

Critical Bug Fix

Version 0.1.0 had a critical bug: custom parameters were silently ignored. The Scheduler stored your parameters but all Rust calls used the defaults. This is now fixed — your custom parameters actually work.

New Features in 0.2.0

Preview All Rating Outcomes

fsrs_repeat() returns all four rating outcomes (Again/Hard/Good/Easy) in a single call, matching the py-fsrs API:

```r
# See all outcomes at once
outcomes <- fsrs_repeat(
  stability = 10,
  difficulty = 5,
  elapsed_days = 5,
  desired_retention = 0.9
)
outcomes$good$stability   # 15.2
outcomes$good$interval    # 12 days
outcomes$again$stability  # 3.1
```

SM-2 Migration

Migrating from Anki’s default algorithm?

fsrs_from_sm2() converts your existing ease factors and intervals to FSRS memory states:

```r
# Convert SM-2 state to FSRS
state <- fsrs_from_sm2(
  ease_factor = 2.5,
  interval = 30,
  sm2_retention = 0.9
)
state$stability   # ~30 days
state$difficulty  # ~5
```

Compute State from Review History

fsrs_memory_state() replays a sequence of reviews to compute the current memory state:

```r
# Replay review history
state <- fsrs_memory_state(
  ratings = c(3, 3, 4, 3),   # Good, Good, Easy, Good
  delta_ts = c(0, 1, 3, 7)   # Days since previous review
)
state$stability
state$difficulty
```

Vectorized Operations

fsrs_retrievability_vec() efficiently calculates recall probability for large datasets:

```r
# Calculate retrievability for 10,000 cards
# (`cards` is assumed to be a data frame with one row per card)
retrievability <- fsrs_retrievability_vec(
  stability = cards$stability,
  elapsed_days = cards$days_since_review
)
```

Scheduler Improvements

- Scheduler$preview_card(): see all outcomes without modifying the card
- Card$clone_card(): deep copy a card for simulations
- fsrs_simulate(): convenience function for learning simulations
- State transitions now correctly match py-fsrs/rs-fsrs behavior
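The retrievability and desired_retention values used throughout the 0.2.0 functions come from FSRS’s power forgetting curve. As I understand it, FSRS-4.5 and FSRS-5 fix the decay at 0.5, while FSRS-6 learns it as one of the 21 parameters. In both cases the factor is chosen so that recall probability is exactly 90% when elapsed time equals stability. The Python sketch below shows that curve, its inverse (the interval for a desired retention), and the same inversion applied to an SM-2 interval. That last step is one plausible reading of why a 30-day interval at 90% retention maps to ~30 days of stability in the fsrs_from_sm2() example. This is not rfsrs code (rfsrs calls into Rust), and it does not attempt the difficulty conversion:

```python
def forgetting_factor(decay: float) -> float:
    """Factor chosen so that R(t=S, S) == 0.9 for any decay > 0."""
    return 0.9 ** (-1.0 / decay) - 1.0


def retrievability(elapsed_days: float, stability: float, decay: float = 0.5) -> float:
    """Power forgetting curve R(t, S) = (1 + factor * t / S) ** -decay."""
    factor = forgetting_factor(decay)
    return (1.0 + factor * elapsed_days / stability) ** -decay


def retrievability_vec(elapsed: list[float], stability: list[float], decay: float = 0.5) -> list[float]:
    """Element-wise version, in the spirit of fsrs_retrievability_vec()."""
    if len(elapsed) != len(stability):
        raise ValueError("elapsed and stability must have the same length")
    return [retrievability(t, s, decay) for t, s in zip(elapsed, stability)]


def interval_for(stability: float, desired_retention: float, decay: float = 0.5) -> float:
    """Invert the curve: days until R falls to desired_retention."""
    factor = forgetting_factor(decay)
    return stability / factor * (desired_retention ** (-1.0 / decay) - 1.0)


def stability_from_sm2(interval: float, sm2_retention: float, decay: float = 0.5) -> float:
    """Pick S so that R(interval, S) == sm2_retention, i.e. SM-2 reviewed at that recall level."""
    factor = forgetting_factor(decay)
    return interval * factor / (sm2_retention ** (-1.0 / decay) - 1.0)
```

For example, `retrievability_vec([0, 5, 20], [10, 10, 10])` gives the recall probability for three cards reviewed 0, 5, and 20 days ago, each with 10 days of stability, and `interval_for(10, 0.9)` returns 10 days because of how the factor is defined.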

Version 0.3.0: Parameter Optimizer

The most requested feature: train your own FSRS parameters from your review history.

Why Optimize?

FSRS uses 21 parameters to predict when you’ll forget a card. The defaults work well for most people, but training custom parameters on your review history can improve scheduling accuracy by 10-30%.

New Functions in 0.3.0

- fsrs_optimize(): Train custom parameters from your review history
- fsrs_evaluate(): Measure how well parameters predict your memory
- fsrs_anki_to_reviews(): Convert Anki’s revlog format for optimization

Optimize Your Parameters

Here’s how to train parameters using your Anki collection:

```r
library(rfsrs)
library(ankiR)

# Get your review history
revlog <- anki_revlog()

# Convert to FSRS format
reviews <- fsrs_anki_to_reviews(revlog, min_reviews = 3)

# Train your parameters (~1 minute)
result <- fsrs_optimize(reviews)

# Your personalized 21 parameters
print(result$parameters)

# Use them with the Scheduler
scheduler <- Scheduler$new(
  parameters = result$parameters,
  desired_retention = 0.9
)
```

How It Works

The optimizer uses machine learning (via the burn framework in Rust) to find parameters that best predict your actual recall patterns. It analyzes your review history to learn:

- How quickly you initially learn new cards

- How your memory decays over time

- How different ratings (Again/Hard/Good/Easy) affect retention
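“Best predict” can be made concrete. As I understand it, fsrs-rs trains by minimizing binary cross-entropy (log loss) between the retrievability predicted at each review and whether that review was a pass (any rating other than Again). Here is a minimal Python sketch of that loss; it illustrates the objective only and is not rfsrs’s or burn’s code:

```python
import math


def log_loss(predicted: list[float], recalled: list[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted recall probabilities and outcomes.

    recalled[i] is 1 if the review was a pass (Hard/Good/Easy) and 0 for Again.
    """
    if len(predicted) != len(recalled) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    total = 0.0
    for p, y in zip(predicted, recalled):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(predicted)
```

An optimizer adjusts the parameters so that reviews you actually passed get high predicted recall and lapses get low predicted recall, which is exactly what drives this number down.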

I tested it on my own collection with ~116,000 reviews across 5,800 cards — optimization took about 60 seconds.

Compare Parameters

Evaluate how well different parameters predict your memory:

```r
# Compare default vs optimized
default_metrics <- fsrs_evaluate(reviews, NULL)
custom_metrics <- fsrs_evaluate(reviews, result$parameters)
cat("Default RMSE:", default_metrics$rmse_bins, "\n")
cat("Custom RMSE:", custom_metrics$rmse_bins, "\n")
```

Lower RMSE means better predictions.
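The name rmse_bins suggests an RMSE computed over groups of reviews rather than individual ones. The Python sketch below uses the simplest binned variant: group reviews by predicted probability into equal-width bins, compare each bin’s average prediction with its observed pass rate, and weight each bin by its size. FSRS’s own binning is more elaborate, so this sketch will not reproduce fsrs_evaluate() numbers; it only shows why lower is better:

```python
import math


def rmse_bins(predicted: list[float], recalled: list[int], n_bins: int = 20) -> float:
    """Count-weighted RMSE between mean prediction and observed recall rate per bin."""
    if len(predicted) != len(recalled) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    sums: dict[int, list[float]] = {}
    for p, y in zip(predicted, recalled):
        b = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls into the last bin
        s = sums.setdefault(b, [0.0, 0.0, 0.0])  # [sum of predictions, sum of outcomes, count]
        s[0] += p
        s[1] += y
        s[2] += 1
    squared_error = 0.0
    for sum_p, sum_y, n in sums.values():
        squared_error += n * (sum_p / n - sum_y / n) ** 2
    return math.sqrt(squared_error / len(predicted))
```

A perfectly calibrated model (cards predicted at 90% really are recalled 90% of the time) scores close to zero, no matter how many individual reviews it gets “wrong”.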

Bug Fix

Fixed an issue where cards with only same-day reviews (all delta_t = 0) could cause the optimizer to fail. These are now correctly filtered out.
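A minimal sketch of that filter, assuming each card’s history is a list of delta_t values in days (a hypothetical layout, not rfsrs’s internal format). Such cards carry no signal about how memory decays between days, so dropping them loses nothing the optimizer could use:

```python
def drop_same_day_only(histories: dict[str, list[int]]) -> dict[str, list[int]]:
    """Keep only cards whose history contains at least one review on a later day (delta_t > 0)."""
    return {card: deltas for card, deltas in histories.items() if any(d > 0 for d in deltas)}
```

For example, a card reviewed three times on the day it was introduced (`[0, 0, 0]`) is dropped, while one reviewed again one and three days later (`[0, 1, 3]`) is kept.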

Installation

```r
# From r-universe (recommended); CRAN is listed too so dependencies can resolve
install.packages(
  "rfsrs",
  repos = c("https://chrislongros.r-universe.dev", "https://cloud.r-project.org")
)

# Or from GitHub
remotes::install_github("open-spaced-repetition/r-fsrs")
```

Note: The first build of v0.3.0 takes ~2 minutes due to compiling the ML framework. Subsequent builds are cached.

Full API Summary

| Function | Description | Version |
|---|---|---|
| fsrs_optimize() | Train custom parameters | 0.3.0 |
| fsrs_evaluate() | Evaluate parameter accuracy | 0.3.0 |
| fsrs_anki_to_reviews() | Convert Anki revlog | 0.3.0 |
| fsrs_repeat() | All 4 rating outcomes at once | 0.2.0 |
| fsrs_from_sm2() | Convert from SM-2/Anki default | 0.2.0 |
| fsrs_memory_state() | Compute state from review history | 0.2.0 |
| fsrs_retrievability_vec() | Vectorized retrievability | 0.2.0 |
| Scheduler$preview_card() | Preview outcomes without modifying | 0.2.0 |
| Card$clone_card() | Deep copy a card | 0.2.0 |

Links

Feedback and contributions welcome!

-

Damien Elmes says he’s stepping back from being Anki’s bottleneck—without saying goodbye.

Anki’s creator, Damien Elmes (often known as “dae”), shared a major update about the future of Anki: after nearly two decades of largely solo stewardship, he intends to gradually transition business operations and open-source stewardship to the team behind AnkiHub.

The headline reassurance is clear: Anki is intended to remain open source, and the transition is framed as a way to make development more sustainable, reduce single-person risk, and accelerate improvements—especially long-requested quality-of-life and UI polish.

Why this change is happening

Damien described a familiar pattern for long-running open-source projects: as Anki grew in popularity, demands on his time increased dramatically. Over time, the work shifted away from “deep work” (solving interesting technical problems) toward reactive support, constant interruptions, and the stress of feeling responsible for millions of users.

- Time pressure and stress: Unsustainably long hours began affecting well-being and relationships.

- Delegation limits: Paying prolific contributors helped, but many responsibilities remained hard to delegate.

- Bottleneck risk: Relying on one person puts the entire ecosystem at risk if they become unavailable.

Why AnkiHub?

According to the announcement, AnkiHub approached Damien about closer collaboration to improve Anki’s development pace. Through those conversations, Damien concluded that AnkiHub is better positioned to help Anki “take the next level,” in part because they’ve already built a team and operational capacity.

Crucially, Damien also emphasized that he has historically rejected buyout or investment offers due to fears of “enshittification” and misaligned incentives. This new transition is presented as different: it aims to preserve Anki’s values and open-source nature, while removing the single-person bottleneck.

“This is a step back for me rather than a goodbye — I will still be involved with the project, albeit at a more sustainable level.”

What AnkiHub says they believe

In their reply, AnkiHub emphasized that Anki is “bigger than any one person or organization” and belongs to the community. They echoed the principles associated with Anki’s development: respect for user agency, avoiding manipulative design patterns, and focusing on building genuinely useful tools rather than engagement traps.

Commitments and reassurances

- Open source: Anki’s core code is intended to remain open source.

- No investors: They state there are no outside investors influencing decisions.

- No pricing changes planned: They explicitly say no changes to Anki pricing are planned.

- Not a financial rescue: They say Anki is not in financial trouble; this is about improving capacity and resilience.

- Mobile apps continue: They say mobile apps will remain supported and maintained.

- AnkiDroid remains independent: They state there are no plans/agreements changing AnkiDroid’s self-governance.

What might improve (and why users should care)

If the transition works as intended, users may see benefits in areas that are hard to prioritize under constant time pressure:

- Faster development: More people can work without everything bottlenecking through one person.

- UI/UX polish: Professional design support to make Anki more approachable without losing power.

- Better onboarding: Improved first-run experience and fewer rough edges for beginners.

- Stronger add-on ecosystem: Clearer APIs, better docs, fewer breaking changes, more predictable releases.

- Lower “bus factor”: Reduced risk if any one contributor disappears.

Open questions

AnkiHub also acknowledged that many details are still undecided and invited community input. Areas still being worked out include:

- Governance: How decisions are made, who has final say, and how community feedback is incorporated.

- Roadmap: What gets built when, and how priorities are balanced.

- Transition mechanics: How support scales up without breaking what already works.

FAQ

Will Anki remain open source?

Yes. Both Damien and AnkiHub explicitly frame the transition around keeping Anki’s core open source and aligned with the principles the project has followed for years.

Is this a sale or VC takeover?

The announcement positions this as a stewardship transition, not a typical investor-led acquisition. AnkiHub states there are no outside investors involved.

Are pricing changes coming?

AnkiHub says no pricing changes are planned and emphasizes affordability and accessibility.

What about mobile and AnkiDroid?

They say mobile apps will remain supported. AnkiDroid is described as continuing as an independent, self-governed open-source project.

Bottom line

Damien isn’t leaving—he’s stepping back to a more sustainable role. The goal is to remove a long-standing bottleneck, reduce ecosystem risk, and speed up improvements without compromising what makes Anki special.

If you’ve wanted faster progress, better UI polish, and a more resilient future for Anki—this transition is designed to make that possible, while keeping the project open source and community-oriented.

Published: February 2, 2026 • Category: Announcements • Tags: Anki, Open Source, AnkiHub, Study Tools

-

After years of using Anki for medical school, I finally got tired of relying on AnkiWeb for syncing. Privacy concerns, sync limits, and the occasional downtime pushed me to self-host. The problem? The official Anki project provides source code but no pre-built Docker image. Building from source every time there’s an update? No thanks.

So I built Anki Sync Server Enhanced — a production-ready Docker image with all the features self-hosters actually need.

Why Self-Host Your Anki Sync?

- Privacy — Your flashcards stay on your server

- No limits — Sync as much as you want

- Speed — Local network sync is instant

- Control — Backups, monitoring, your rules

What Makes This Image Different?

I looked at existing solutions and found them lacking. Most require you to build from source or offer minimal features. Here’s what this image provides out of the box:

| Feature | Build from Source | This Image |
|---|---|---|
| Pre-built Docker image | No | Yes |
| Auto-updates | Manual | Daily builds via GitHub Actions |
| Multi-architecture | Manual setup | amd64 + arm64 |
| Automated backups | No | Yes, with retention policy |
| S3 backup upload | No | Yes (AWS, MinIO, Garage) |
| Prometheus metrics | No | Yes |
| Web dashboard | No | Yes |
| Notifications | No | Discord, Telegram, Slack, Email |

Quick Start

Getting started takes less than a minute:

```bash
docker run -d \
  --name anki-sync \
  -p 8080:8080 \
  -e SYNC_USER1=myuser:mypassword \
  -v anki_data:/data \
  chrislongros/anki-sync-server-enhanced
```

That’s it. Your sync server is running.
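SYNC_USER1 follows the convention of the upstream anki-sync-server: each account is a numbered SYNC_USERn variable holding username:password. To illustrate that convention only (this is not the image’s own code, and the function name is mine), here is how such variables could be collected in Python. The sketch assumes contiguous numbering and splits on the first colon so passwords may themselves contain colons:

```python
import os
from typing import Mapping


def sync_users(env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Collect SYNC_USER1, SYNC_USER2, ... into {username: password}.

    Stops at the first missing index, so numbering must be contiguous.
    """
    users: dict[str, str] = {}
    n = 1
    while (entry := env.get(f"SYNC_USER{n}")) is not None:
        username, sep, password = entry.partition(":")  # split on the first colon only
        if not sep or not username:
            raise ValueError(f"SYNC_USER{n} must look like username:password")
        users[username] = password
        n += 1
    return users
```

With the Compose example below, `sync_users()` inside the container would yield two accounts, alice and bob.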

Docker Compose (Recommended)

For a more complete setup with backups and monitoring:

```yaml
services:
  anki-sync-server:
    image: chrislongros/anki-sync-server-enhanced:latest
    container_name: anki-sync-server
    ports:
      - "8080:8080"  # Sync server
      - "8081:8081"  # Dashboard
      - "9090:9090"  # Metrics
    environment:
      - SYNC_USER1=alice:password1
      - SYNC_USER2=bob:password2
      - TZ=Europe/Berlin
      - BACKUP_ENABLED=true
      - BACKUP_SCHEDULE=0 3 * * *
      - METRICS_ENABLED=true
      - DASHBOARD_ENABLED=true
    volumes:
      - anki_data:/data
      - anki_backups:/backups
    restart: unless-stopped

volumes:
  anki_data:
  anki_backups:
```

The Dashboard

One feature I’m particularly proud of is the built-in web dashboard. Enable it with DASHBOARD_ENABLED=true and access it on port 8081.

It shows:

- Server status and uptime

- Active users

- Data size

- Backup status

- Authentication statistics

- Feature toggles

No more SSH-ing into your server just to check if everything is running.

Configuring Your Anki Clients

Desktop (Windows/Mac/Linux)

- Open Anki

- Go to Tools → Preferences → Syncing

- Set the sync server to: http://your-server:8080/

- Sync and enter your credentials

AnkiDroid

- Open Settings → Sync

- Set Custom sync server to: http://your-server:8080/

- Set Media sync URL to: http://your-server:8080/msync/

AnkiMobile (iOS)

- Settings → Sync → Custom server

- Enter: http://your-server:8080/
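The trailing slash in these URLs matters if you ever place the server behind a path prefix: a relative join against a base without a trailing slash replaces the last path segment instead of appending to it. Here is a small hypothetical helper, using only Python’s standard urllib.parse, that derives both AnkiDroid URLs from one base:

```python
from urllib.parse import urljoin


def client_urls(base: str) -> tuple[str, str]:
    """Return (sync_url, media_sync_url) for a server base URL.

    A trailing slash is forced so urljoin() appends 'msync/' instead of
    replacing the last path segment.
    """
    if not base.endswith("/"):
        base += "/"
    return base, urljoin(base, "msync/")
```

For example, `client_urls("https://example.org/anki")` gives `https://example.org/anki/msync/` for media, whereas joining `msync/` onto `https://example.org/anki` directly would produce `https://example.org/msync/`.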

Backup to S3

If you want offsite backups, the image supports S3-compatible storage (AWS S3, MinIO, Garage, Backblaze B2):

```yaml
environment:
  - BACKUP_ENABLED=true
  - S3_BACKUP_ENABLED=true
  - S3_ENDPOINT=https://s3.example.com
  - S3_BUCKET=anki-backups
  - S3_ACCESS_KEY=your-access-key
  - S3_SECRET_KEY=your-secret-key
```

Notifications

Get notified when the server starts, stops, or completes a backup:

```yaml
# Discord
- NOTIFY_ENABLED=true
- NOTIFY_TYPE=discord
- NOTIFY_WEBHOOK_URL=https://discord.com/api/webhooks/...
# Or Telegram, Slack, ntfy, generic webhook
```

NAS Support

The image works great on NAS systems:

- TrueNAS SCALE — Install via Custom App with YAML

- Unraid — Template available in the repository

- Synology/QNAP — Standard Docker installation

CLI Tools Included

The image comes with helpful management scripts:

```bash
# User management
docker exec anki-sync user-manager.sh list
docker exec anki-sync user-manager.sh add john
docker exec anki-sync user-manager.sh reset john newpassword

# Backup management
docker exec anki-sync backup.sh
docker exec anki-sync restore.sh --list
docker exec anki-sync restore.sh backup_file.tar.gz
```

Links

- GitHub: github.com/chrislongros/anki-sync-server-enhanced

- Docker Hub: hub.docker.com/r/chrislongros/anki-sync-server-enhanced

Conclusion

If you’re using Anki seriously — for medical school, language learning, or any knowledge work — self-hosting your sync server gives you complete control over your data. This image makes it as simple as a single Docker command.

Questions or feature requests? Open an issue on GitHub or leave a comment below.

Happy studying!

-

Hotfixes

- Fixed a bug where remote video assets played with the wrong aspect ratio

- Fixed a bug where memory generation failed

- Fixed a bug where memories don’t show on the web until the page is refreshed

- Fixed a bug where the Load original image option doesn’t render the image on iOS

What’s Changed

🐛 Bug fixes

- fix: deleting asset from asset-viewer on search results by @midzelis in #25596

- fix: escape handling in search asset viewer by @danieldietzler in #25621

- fix: correctly show owner in album options modal by @danieldietzler in #25618

- fix(server): don’t assume maintenance action is set by @insertish in #25622

- fix: album card ranges by @danieldietzler in #25639

- fix(mobile): show controls by default on motion photos by @goalie2002 in #25638

- fix: escape handling by @danieldietzler in #25627

- fix(mobile): set correct system-ui mode on asset viewer init by @goalie2002 in #25610

- fix(mobile): actually load original image by @mertalev in #25646

- fix: width and height migration issue by @alextran1502 in #25643

- fix: memory lane by @jrasm91 in #25652

- fix: memory generation by @jrasm91 in #25650

- fix(mobile): tall image scrolling by @ByteSizedMarius in #25649

-

Immich, the popular open-source, self-hosted photo and video management solution, has launched a community-driven initiative to improve its metadata handling capabilities. Through the new EXIF Dataset project, users can contribute their photos to help train and improve Immich’s EXIF parsing and metadata extraction features.

I recently contributed some of my own photos to the project, and I want to share how easy and straightforward the process is. If you’re an Immich user (or simply an open-source enthusiast), this is a fantastic way to give back to the community.

What is the EXIF Dataset Project?

EXIF (Exchangeable Image File Format) data is the metadata embedded in your photos by your camera or smartphone. This includes information like the camera make and model, date and time, GPS coordinates, lens information, and much more. Immich uses this data extensively to organize your photo library, enable timeline views, power location-based features, and facilitate powerful search capabilities.
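Two of the fields above are stored in formats that are easy to mishandle. In the EXIF specification, DateTimeOriginal is text like 2024:07:15 13:45:10 (colons in the date and no time zone; EXIF 2.31 added a separate OffsetTimeOriginal tag such as +02:00), and GPS coordinates are stored as degrees, minutes, and seconds plus an N/S or E/W reference tag. Here is a minimal Python sketch of normalizing both, assuming some EXIF reader has already extracted the raw tag values. The function names are mine, and this is not Immich’s code:

```python
from datetime import datetime
from typing import Optional, Tuple


def parse_exif_datetime(value: str, offset: Optional[str] = None) -> datetime:
    """Parse an EXIF DateTimeOriginal string such as '2024:07:15 13:45:10'.

    The tag itself has no time zone; pass an OffsetTimeOriginal value such as
    '+02:00' as `offset` to get a timezone-aware datetime.
    """
    text = value.strip(" \x00")  # raw EXIF ASCII values may carry a trailing NUL
    if offset:
        # %z accepts '+02:00' (with a colon) since Python 3.7
        return datetime.strptime(text + offset.strip(" \x00"), "%Y:%m:%d %H:%M:%S%z")
    return datetime.strptime(text, "%Y:%m:%d %H:%M:%S")  # naive: zone unknown


def dms_to_decimal(dms: Tuple[float, float, float], ref: str) -> float:
    """Convert EXIF GPS degrees/minutes/seconds plus an N/S/E/W reference to signed decimal degrees."""
    degrees, minutes, seconds = (float(x) for x in dms)
    decimal = degrees + minutes / 60 + seconds / 3600
    return -decimal if ref.strip().upper() in ("S", "W") else decimal
```

A phone that writes OffsetTimeOriginal yields an unambiguous timeline position, while a camera that omits it leaves only a naive local time. That is the kind of per-device variation a shared dataset helps surface.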

The EXIF Dataset project at datasets.immich.app/projects/exif allows community members to contribute photos along with their intact EXIF metadata. This crowdsourced dataset helps the Immich team understand how different cameras and devices encode their metadata, ultimately improving compatibility and parsing accuracy for everyone.

🔗 Contribute Now: Visit datasets.immich.app/projects/exif to start contributing your photos to the EXIF Dataset.

My Contribution Experience

The contribution process is remarkably simple and well-designed. Here’s how it works:

Step 1: Upload Your Photos

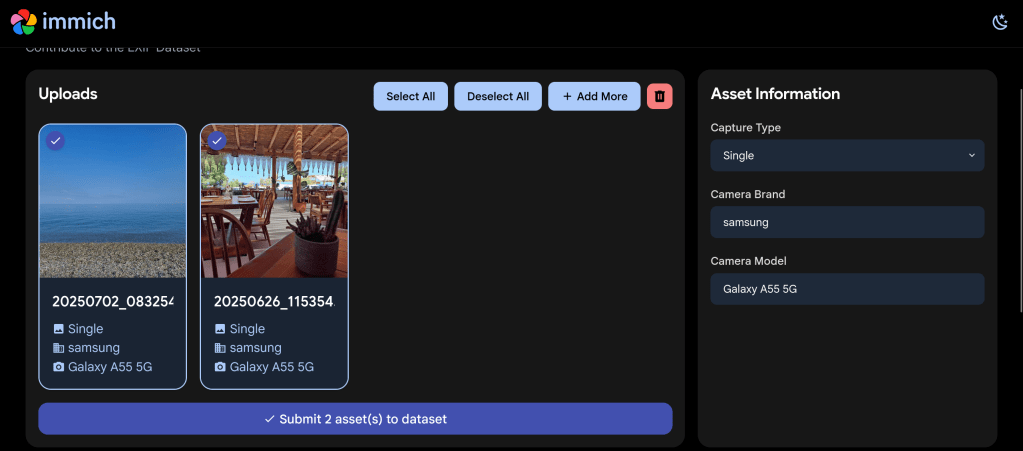

After navigating to the EXIF Dataset project page, you’re greeted with a clean upload interface. I uploaded a couple of photos taken with my Samsung Galaxy A55 5G – a beach landscape shot and a photo from a beachside restaurant.

The clean upload interface showing my first selected photo with its EXIF metadata displayed

The interface immediately displays the extracted EXIF information on the right side, including the capture type (Single), camera brand (Samsung), and camera model (Galaxy A55 5G). This lets you verify that your photos contain the metadata you want to contribute.

Step 2: Select Photos for Submission

You can upload multiple photos at once using the “+ Add More” button. I selected both of my photos for contribution – each showing clearly with a checkmark indicating selection.

Two photos selected and ready to submit to the EXIF Dataset

The interface provides convenient “Select All” and “Deselect All” buttons, as well as a delete option if you change your mind about any uploads.

Step 3: Agree to the CC0 License

When you click “Submit asset(s) to dataset”, a Dataset Agreement dialog appears. This is where the legal side of your contribution is handled transparently.

The Dataset Agreement confirms your photos will be released under the CC0 public domain license

ℹ️ About CC0: The CC0 (Creative Commons Zero) license means you’re releasing your contributed photos into the public domain. This allows the Immich project (and anyone else) to use the images freely for any purpose. Make sure you only upload photos you own the rights to and are comfortable sharing publicly.

The agreement requires you to confirm two things:

- You agree to release the uploaded assets under the CC0 license into the public domain

- The files have not been modified in any way that would alter their original content or metadata

You also provide a contact email in case the Immich team has any questions about your upload.

Why Should You Contribute?

Contributing to the EXIF Dataset helps improve Immich in several ways:

- Better Device Support: By collecting EXIF samples from many different cameras and phones, Immich can improve its parsing for devices that may have quirks or non-standard metadata encoding

- Improved Metadata Extraction: The dataset helps identify edge cases and unusual metadata formats that might otherwise go unnoticed

- Community-Driven Development: Your contribution directly influences the quality of an open-source project used by thousands of self-hosters worldwide

- Supporting Privacy-Focused Software: Immich is a privacy-respecting alternative to cloud-based photo services like Google Photos – your contribution helps make it even better

Tips for Contributing

To make your contribution as valuable as possible:

- Contribute from different devices: If you have photos from older cameras, different smartphone brands, or professional equipment, these are especially valuable

- Keep metadata intact: Don’t strip or modify the EXIF data before uploading – the original metadata is exactly what’s needed

- Consider variety: Photos taken in different conditions (indoor, outdoor, various lighting) may contain different metadata values

- Check your ownership: Only contribute photos you’ve taken yourself or have explicit rights to share
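If you want to confirm that a photo still carries its metadata before uploading, a quick script can help. The sketch below is a minimal, standard-library-only check for JPEG files. The `has_exif` helper is hypothetical and not part of Immich. It walks the JPEG marker segments and looks for an APP1 segment that begins with the `Exif\0\0` header, which is where JPEG files keep their EXIF block. It does not handle HEIC, PNG, or RAW files.

```python
import struct
import sys


def has_exif(data: bytes) -> bool:
    """Return True if JPEG bytes contain an APP1 segment with an EXIF header."""
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        return False
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            return False  # not at a marker: malformed or unexpected layout
        marker = data[pos + 1]
        if marker == 0xDA:  # SOS: compressed image data follows, no more metadata segments
            return False
        # Segment length is big-endian and counts its own two bytes
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if marker == 0xE1 and data[pos + 4:pos + 10] == b"Exif\x00\x00":
            return True
        pos += 2 + length
    return False


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python has_exif.py IMG_0001.jpg IMG_0002.jpg ...
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            print(path, "EXIF present" if has_exif(f.read()) else "no EXIF found")
```

A file that reports "no EXIF found" has probably been through an editor or messaging app that stripped its metadata, which makes it less useful for the dataset.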

About Immich

For those unfamiliar with Immich, it’s a high-performance, self-hosted photo and video management solution that offers features comparable to Google Photos – but with full control over your data. Key features include automatic backup from mobile devices, facial recognition, smart search, timeline views, shared albums, and much more.

Immich is developed under the AGPL-3.0 license and is backed by FUTO, an organization dedicated to developing privacy-preserving technology. The project has grown tremendously, with over 77,000 stars on GitHub, making it one of the most popular self-hosted applications available.

🏠 Self-Host Immich: Get started with Immich at immich.app – available for Docker, TrueNAS, Unraid, and other platforms.

Conclusion

Contributing to the Immich EXIF Dataset is a simple yet meaningful way to support open-source software development. The process takes just a few minutes, and your contribution will help improve photo management for the entire Immich community.

Head over to datasets.immich.app/projects/exif today and share some of your photos. Every contribution counts!

-

GitHub has officially rolled out the improved “Files Changed” experience as the default for all users. After months in public preview, this redesigned pull request review interface brings significant improvements to performance, accessibility, and overall productivity when reviewing code changes.

Key Improvements Over the Classic Experience

The new interface maintains familiarity for existing users while adding several notable enhancements:

Comment on Any Line

Previously, you could only comment on lines directly surrounding a change. Now you can add review comments to any line in a changed file, making it easier to provide context or point out related code that might need attention.

View PR Description Without Switching Pages

A new Overview panel lets you view the pull request description directly from the “Files changed” page. No more jumping back and forth between tabs to remember what the PR is supposed to accomplish.

Enhanced File Tree

The file tree sidebar is now resizable and includes visual indicators showing which files have comments, errors, or warnings. This makes it much easier to track your progress when reviewing large PRs with many changed files.

Draft Comments That Persist

Comments and replies are now saved locally in your browser. If you accidentally close the tab or refresh the page, your in-progress feedback won’t be lost.

Fewer Page Reloads

Actions like refreshing to pull in new changes, switching between split and unified diff modes, and other common tasks no longer force a full page reload. The interface feels much snappier as a result.

Improved Accessibility

The new experience includes better keyboard navigation, screen reader landmarks, and increased line spacing options to make code review accessible to everyone.

Experimental Mode for Large Pull Requests

One of the most interesting additions is an experimental mode specifically designed for reviewing large pull requests. This mode uses virtualization to reduce the number of DOM elements the browser needs to manage, significantly improving memory usage and page responsiveness—especially on slower machines.

When viewing a large PR, you’ll see a banner offering to try this experimental mode. There are some trade-offs: browser find functionality, text selection across the entire page, printing, and some browser extensions may not work as expected since the full diff isn’t rendered in the DOM. You can switch back to single file mode at any time.
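Virtualization (sometimes called windowing) means the page only creates DOM nodes for the rows near the viewport and fills the rest of the scroll height with empty space. GitHub hasn't published its implementation, so the following is only a minimal sketch of the core calculation, assuming every diff row has the same fixed height. The names `visible_range`, `spacer_heights`, and the `overscan` margin are hypothetical.

```python
def visible_range(scroll_top: int, viewport_height: int, row_height: int,
                  total_rows: int, overscan: int = 5) -> range:
    """Indices of the diff rows that should exist in the DOM right now.

    Assumes fixed-height rows. `overscan` renders a few extra rows above and
    below the viewport so fast scrolling doesn't flash empty space.
    """
    first = max(0, scroll_top // row_height - overscan)
    last = min(total_rows, (scroll_top + viewport_height) // row_height + 1 + overscan)
    return range(first, last)


def spacer_heights(rows: range, row_height: int, total_rows: int) -> tuple[int, int]:
    """Empty padding above and below the rendered rows, so the scrollbar still reflects the full diff."""
    return rows.start * row_height, (total_rows - rows.stop) * row_height


# A 50,000-line diff with 20 px rows in an 800 px viewport, scrolled to y = 10,000
rows = visible_range(10_000, 800, 20, 50_000)
print(len(rows), spacer_heights(rows, 20, 50_000))  # 51 (9900, 989080)
```

Only 51 rows exist in the document instead of 50,000, which is where the memory savings come from. It also explains the trade-offs above: browser find, cross-page selection, and printing can only see the rows inside the current window.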

Bug Fixes and Polish

GitHub has also addressed numerous issues including problems with suggested changes being applied incorrectly, comment workflow bugs, interaction lag (especially on Safari), and various UI quirks like scroll positioning and sticky headers behaving unexpectedly.

Opting Out

If you prefer the classic experience, you can still opt out through your settings. However, given the improvements in this new version, it’s worth giving it a fair trial before switching back.

Providing Feedback

GitHub is actively collecting feedback on the new experience. If you encounter issues or have suggestions, you can participate in the “Files Changed” preview feedback discussion on GitHub.

-

If you’re using Tailscale with Mullvad VPN (either via the native Tailscale integration or standalone) and Firefox’s DNS over HTTPS (DoH), you might suddenly find yourself unable to access your Tailscale services via their `*.ts.net` hostnames, even though everything worked fine before.

The symptoms are frustrating: `tailscale ping` works, `dig` resolves the hostname correctly, but Firefox just refuses to connect.

Why This Happens

When you enable DNS over HTTPS in Firefox (especially with “Max Protection” mode), Firefox bypasses your system’s DNS resolver entirely and sends all DNS queries directly to your chosen DoH provider: in this case, Mullvad’s DNS server at https://base.dns.mullvad.net/dns-query.

The problem? Mullvad’s public DNS server has no idea what `my-server.my-tailnet.ts.net` is. That’s a private hostname that only Tailscale’s MagicDNS (running at `100.100.100.100`) knows how to resolve.

So while your system can resolve the hostname just fine:

```
$ dig my-server.my-tailnet.ts.net
;; ANSWER SECTION:
my-server.my-tailnet.ts.net. 600 IN A 100.x.x.x
;; SERVER: 100.100.100.100#53(100.100.100.100) (UDP)
```

Firefox completely ignores this and asks Mullvad instead, which returns nothing.

The Solution

Firefox provides a way to exclude specific domains from DoH, forcing it to fall back to system DNS for those domains. Here’s how to set it up:

- Open Firefox and navigate to `about:config`

- Search for `network.trr.excluded-domains`

- Add `ts.net` to the list (comma-separated if there are existing entries)

For example:

`ts.net`

Or, if you have other exclusions:

`example.local, ts.net`

This tells Firefox: “For any domain ending in `.ts.net`, use the system DNS resolver instead of DoH.” Since your system DNS is controlled by Tailscale’s MagicDNS, the hostname will resolve correctly.

The Gotcha: Old Tailnet Names

Here’s a subtle issue that can trip you up: if you previously had a different Tailscale account or renamed your tailnet, you might have an old, specific exclusion that no longer applies.

For example, you might have:

`my-nas.old-tailnet.ts.net`

But your current tailnet is `new-tailnet.ts.net`. The old exclusion does nothing for your new tailnet!

The fix is simple: instead of excluding specific tailnet hostnames, just exclude the entire `ts.net` domain. This covers all Tailscale hostnames, regardless of your tailnet name, now and in the future.

Verifying the Fix

After making the change, you can verify everything is working:

- Test Tailscale connectivity (should already work): `tailscale ping your-machine-name`

- Test DNS resolution from the command line: `dig your-machine-name.your-tailnet.ts.net`

- Test in Firefox: navigate to your Tailscale hostname; it should now load.
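If you’d rather script these checks, here is a minimal Python sketch. It assumes that a domain listed in `network.trr.excluded-domains` covers both itself and all of its subdomains (the behavior the setting above relies on), and it uses the address ranges Tailscale assigns to devices: `100.64.0.0/10` for IPv4 and `fd7a:115c:a1e0::/48` for IPv6. The helper names are hypothetical, and the script only inspects the preference value you pass it; it doesn’t read or change Firefox’s settings.

```python
import ipaddress
import socket
import sys

# Address ranges Tailscale assigns to tailnet devices
TAILNET_RANGES = [
    ipaddress.ip_network("100.64.0.0/10"),
    ipaddress.ip_network("fd7a:115c:a1e0::/48"),
]


def is_excluded(hostname: str, excluded_domains: str) -> bool:
    """Would Firefox skip DoH for this host? Assumes each entry matches itself and its subdomains."""
    host = hostname.rstrip(".").lower()
    for entry in excluded_domains.split(","):
        domain = entry.strip().rstrip(".").lower()
        if domain and (host == domain or host.endswith("." + domain)):
            return True
    return False


def is_tailnet_address(ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in TAILNET_RANGES)  # mismatched IP versions simply return False


def system_addresses(hostname: str) -> set[str]:
    """Resolve through the OS resolver, which is MagicDNS when Tailscale manages DNS."""
    return {info[4][0] for info in socket.getaddrinfo(hostname, None)}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_tailnet.py my-server.my-tailnet.ts.net "example.local, ts.net"
    host = sys.argv[1]
    pref = sys.argv[2] if len(sys.argv) > 2 else "ts.net"
    addrs = system_addresses(host)
    print("excluded from DoH:", is_excluded(host, pref))
    print("system DNS answers:", sorted(addrs))
    print("all in tailnet ranges:", all(is_tailnet_address(a) for a in addrs))
```

With the old, tailnet-specific value, `is_excluded("my-server.new-tailnet.ts.net", "my-nas.old-tailnet.ts.net")` returns `False`, which is exactly the gotcha described earlier; with `ts.net` it returns `True`.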

Summary

If you’re combining Firefox DoH with Tailscale:

- Firefox’s DoH bypasses Tailscale’s MagicDNS

- Add `ts.net` to `network.trr.excluded-domains` in `about:config`

- Use `ts.net` (not a specific tailnet name) to future-proof the setting

This gives you the best of both worlds: private DNS for general browsing via Mullvad, and working hostname resolution for your Tailscale network.

-

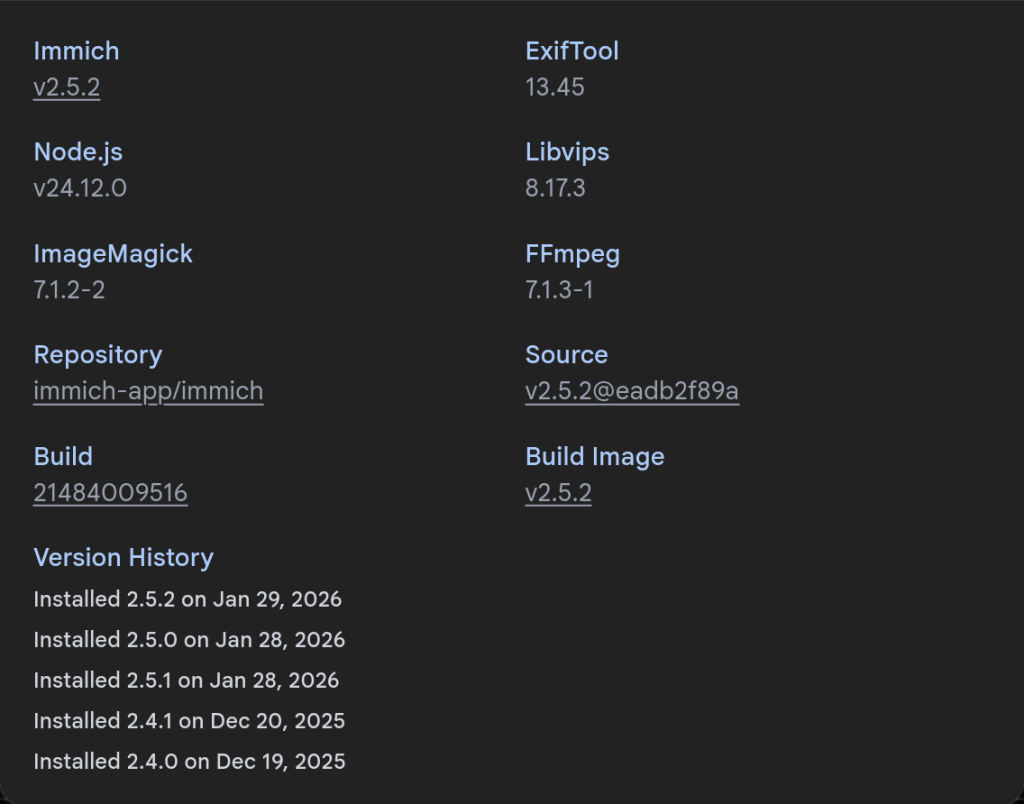

Happy New Year, self-hosters! The Immich team has kicked off 2026 with a bang, releasing version 2.5.0 – aptly named the “90,000 Stars Release” in celebration of reaching this impressive GitHub milestone. This release is packed with long-awaited features that significantly improve both the mobile and web experience. Let’s dive into what’s new.

Free Up Space: Finally Here

This feature has been one of the most requested since the early days of Immich (it has a 3-digit issue ID!). Free Up Space allows you to remove local media files from your mobile device that have already been successfully backed up to your Immich server.

Free Up Space accessible from the user profile panel

Configuration options for Free Up Space

The feature includes smart configuration options:

- Cutoff date: Only process photos and videos on or before a specified date

- Keep albums: Preserve specific albums (WhatsApp-related albums are kept by default)

- Keep favorites: Favorited assets stay on your device

- Keep on device: Option to always keep all photos or all videos

Before any files are removed, you’ll see a review screen showing exactly what will be deleted and how much storage you’ll reclaim. Deleted items go to your device’s native Trash, giving you a safety net.
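The post doesn’t show how Immich combines these settings internally. As a rough mental model only, here is a sketch in which every type, field, and album name is hypothetical: an asset is offered for removal only if it is already backed up, falls on or before the cutoff date, and isn’t protected by any of the keep options.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Asset:  # hypothetical record for a photo or video on the device
    name: str
    taken: date
    backed_up: bool
    is_video: bool = False
    favorite: bool = False
    albums: set[str] = field(default_factory=set)


@dataclass
class FreeUpOptions:  # hypothetical mirror of the settings listed above
    cutoff: date
    keep_albums: set[str] = field(default_factory=lambda: {"WhatsApp"})
    keep_favorites: bool = True
    keep_all_photos: bool = False
    keep_all_videos: bool = False


def removal_candidates(assets: list[Asset], opts: FreeUpOptions) -> list[Asset]:
    """Assets that would appear on the review screen; nothing is deleted here."""
    out = []
    for a in assets:
        if not a.backed_up or a.taken > opts.cutoff:
            continue  # only backed-up media on or before the cutoff date
        if a.albums & opts.keep_albums:
            continue  # belongs to at least one protected album
        if opts.keep_favorites and a.favorite:
            continue
        if (a.is_video and opts.keep_all_videos) or (not a.is_video and opts.keep_all_photos):
            continue
        out.append(a)
    return out
```

In the app, the resulting selection is what the review screen presents, and confirmed items go to the device’s Trash rather than being erased outright.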

Non-Destructive Photo Editing

Immich now supports non-destructive editing – a major enhancement for anyone who’s hesitated to edit photos for fear of losing the original. Edits are stored in the database while original files remain untouched. You can always revert to the original.

Click the edit icon to enter edit mode

Currently supported editing operations:

- Cropping

- Rotation

- Mirroring

The editing interface with cropping, rotation, and mirroring tools

Opening the editor on an edited asset loads existing edits for adjustment

When downloading an edited asset, you get the edited version by default, but can also choose to download the original. Note that mobile editing still uses the old system for now – the non-destructive approach will come to mobile in a future release.
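The core idea of non-destructive editing is that edits are stored as data and replayed on top of the untouched original whenever the image is displayed or downloaded. The sketch below illustrates that idea on a tiny grid of pixel values. The `Crop`, `Rotate`, and `Mirror` types and the `render` function are hypothetical and don’t reflect Immich’s actual database schema.

```python
from dataclasses import dataclass
from typing import Union

Image = list[list[int]]  # rows of pixel values


@dataclass(frozen=True)
class Crop:
    x: int
    y: int
    width: int
    height: int


@dataclass(frozen=True)
class Rotate:
    quarter_turns: int  # clockwise 90° steps


@dataclass(frozen=True)
class Mirror:
    horizontal: bool = True  # False flips top-to-bottom instead


Edit = Union[Crop, Rotate, Mirror]


def render(original: Image, edits: list[Edit]) -> Image:
    """Replay stored edits on a copy; the original pixels are never modified."""
    img = [row[:] for row in original]
    for edit in edits:
        if isinstance(edit, Crop):
            img = [row[edit.x:edit.x + edit.width] for row in img[edit.y:edit.y + edit.height]]
        elif isinstance(edit, Rotate):
            for _ in range(edit.quarter_turns % 4):
                img = [list(col) for col in zip(*img[::-1])]  # one 90° clockwise turn
        elif isinstance(edit, Mirror):
            img = [row[::-1] for row in img] if edit.horizontal else img[::-1]
    return img


original = [[1, 2, 3],
            [4, 5, 6]]
stored_edits = [Crop(x=1, y=0, width=2, height=2), Rotate(quarter_turns=1)]
print(render(original, stored_edits))  # [[5, 2], [6, 3]]
print(render(original, []))            # reverting = replaying no edits
```

Reverting to the original is just rendering with an empty edit list, and reopening the editor means loading the stored list so it can be adjusted rather than starting over.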

Web-Based Database Backup and Restore

Database management just got significantly easier. Previously, restoring an Immich instance required command-line access – a barrier for users new to self-hosting. Now, the entire backup and restore pipeline is built into the web UI.

You can restore from two locations:

- Restore from the Administration → Maintenance page

- Restore from the Onboarding page on a fresh installation

This is particularly valuable if you’ve ever worried about database corruption from power loss or system failures.

Upload Improvements

Foreground uploads on mobile have been significantly improved. The new implementation brings back reliable upload handling while adding concurrent uploads and proper support for assets with missing file extensions (common with DJI and Fusion Camera files).

Improved upload interface with concurrent upload support

A notable improvement for iOS/iCloud users: uploads now send unique metadata to the server for faster checksum retrieval when reinstalling the app. To take advantage of this for existing uploads, go to App Settings → Sync Status and tap “Sync Cloud IDs” once.

Sync Cloud IDs to backfill metadata for existing uploads (iOS/iCloud users)

Visual Refresh

The entire Immich experience has received a visual update across web, mobile, and documentation. A new font improves readability, especially for numbers and smaller text.

Refreshed visual design with improved typography

The UI library has been integrated more deeply into the web app, providing more consistent components and better visual hierarchy.

More standardized and coherent UI components

All icon buttons now include tooltips – no more guessing what a button does.

All icon buttons now show helpful tooltips

Additional Highlights

Star Rating on Mobile

Mobile users can now rate their photos with stars, bringing feature parity with the web application.

Star rating now available on mobile

Disable Admin Setup

New environment variable `IMMICH_ALLOW_SETUP=true|false` lets you prevent the admin setup page from appearing after initial configuration – useful if your database ever gets accidentally reset.

Fine-Grained API Permissions

New scoped permissions for API keys include `map.read`, `map.search`, and `folder.read`.

Progressive JPEGs

Image generation settings now include an option for progressive JPEGs, allowing supported browsers to render images progressively as they load.
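If you want to check whether a given JPEG is progressive, the answer is in its frame header: a baseline JPEG declares its frame with the SOF0 marker (`0xFFC0`), while a progressive one uses SOF2 (`0xFFC2`). The sketch below reads that marker using only the standard library. The `is_progressive_jpeg` helper is hypothetical, and where Immich stores its generated images is not covered in this post.

```python
import struct
import sys

# Start-of-frame markers (0xC4 DHT, 0xC8 JPG and 0xCC DAC share the range but aren't frames)
SOF_MARKERS = {0xC0, 0xC1, 0xC2, 0xC3, 0xC5, 0xC6, 0xC7, 0xC9, 0xCA, 0xCB, 0xCD, 0xCE, 0xCF}
PROGRESSIVE_SOF = {0xC2, 0xC6, 0xCA, 0xCE}  # SOF2, SOF6, SOF10, SOF14


def is_progressive_jpeg(data: bytes) -> bool:
    """Return True if the first frame header in the JPEG is a progressive one."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker in SOF_MARKERS:
            return marker in PROGRESSIVE_SOF
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])  # length includes its own 2 bytes
        pos += 2 + length
    raise ValueError("no frame header found")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python progressive.py thumbnail.jpg ...
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            print(path, "progressive" if is_progressive_jpeg(f.read()) else "baseline")
```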

New progressive JPEG option in image generation settings

Slideshow Loop

Web slideshows can now automatically restart when they reach the end.

New loop option in slideshow settings

Native HTTP Clients

All remote images now use optimized HTTP clients supporting HTTP/2 and HTTP/3. Images load faster, caching is improved, and the offline experience is more responsive with a larger cache size.

Important Notes

Mobile App Update Paused: As of the release, the team has temporarily halted the mobile app release due to some reported migration issues. Check the GitHub release page for the latest status.

Client Compatibility: Mobile clients must be updated to v2.5.0 to view edited versions of assets. Older clients will continue to see original images.

How to Update

Follow the standard update procedure for your deployment method. As always, ensure you have a backup before upgrading.

For the complete changelog including all bug fixes and documentation updates, check the full release notes on GitHub.

Support the Project

If you find Immich helpful, consider supporting the project by purchasing a product key at buy.immich.app or grabbing some merchandise at immich.store.

-

Introducing ankiR Stats: The Only Anki Addon with Time Series Analytics

I’m excited to announce the release of ankiR Stats, a new Anki addon that brings advanced statistical analysis to your flashcard reviews. If you’ve ever wondered about the patterns hidden in your study data, this addon is for you.

Why Another Stats Addon?

There are several statistics addons for Anki already – Review Heatmap, More Overview Stats, True Retention. They’re great for basic numbers. But none of them answer questions like:

- Is my retention trending up or down over time?

- What’s my weekly study pattern? Do I study more on weekends?

- Which days were unusually productive (or lazy)?

- How are my card intervals growing over months?

ankiR Stats answers all of these using the same statistical techniques data scientists use.
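The post doesn’t describe how ankiR Stats reads your history, but Anki records every answer in the `revlog` table of its SQLite collection file, so a question like “is my retention trending?” starts with a daily pass rate. Here is a minimal sketch, assuming the standard `revlog` columns: `id` is the answer time in epoch milliseconds, `ease` 1 is Again, and `type` 1 is a review of a graduated card. The `daily_retention` helper is hypothetical, and it buckets by UTC date rather than Anki’s configurable day rollover.

```python
import sqlite3
import sys
from collections import defaultdict
from datetime import datetime, timezone


def daily_retention(conn: sqlite3.Connection) -> dict[str, float]:
    """Fraction of review answers that weren't 'Again', per UTC calendar day.

    Assumes Anki's revlog schema: id = answer time in epoch milliseconds,
    ease 1 = Again (ease 0 marks manual reschedules, so it's excluded),
    type 1 = review of a graduated card (learning steps are left out).
    """
    passed = defaultdict(int)
    total = defaultdict(int)
    rows = conn.execute("SELECT id, ease FROM revlog WHERE type = 1 AND ease > 0")
    for answered_ms, ease in rows:
        day = datetime.fromtimestamp(answered_ms / 1000, tz=timezone.utc).date().isoformat()
        total[day] += 1
        passed[day] += int(ease > 1)
    return {day: passed[day] / total[day] for day in sorted(total)}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python retention.py /path/to/collection.anki2  (close Anki first)
    conn = sqlite3.connect(f"file:{sys.argv[1]}?mode=ro", uri=True)  # read-only
    for day, rate in daily_retention(conn).items():
        print(day, f"{rate:.1%}")
    conn.close()
```

Opening the collection read-only with Anki closed avoids any risk of interfering with Anki’s own writes.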

Features

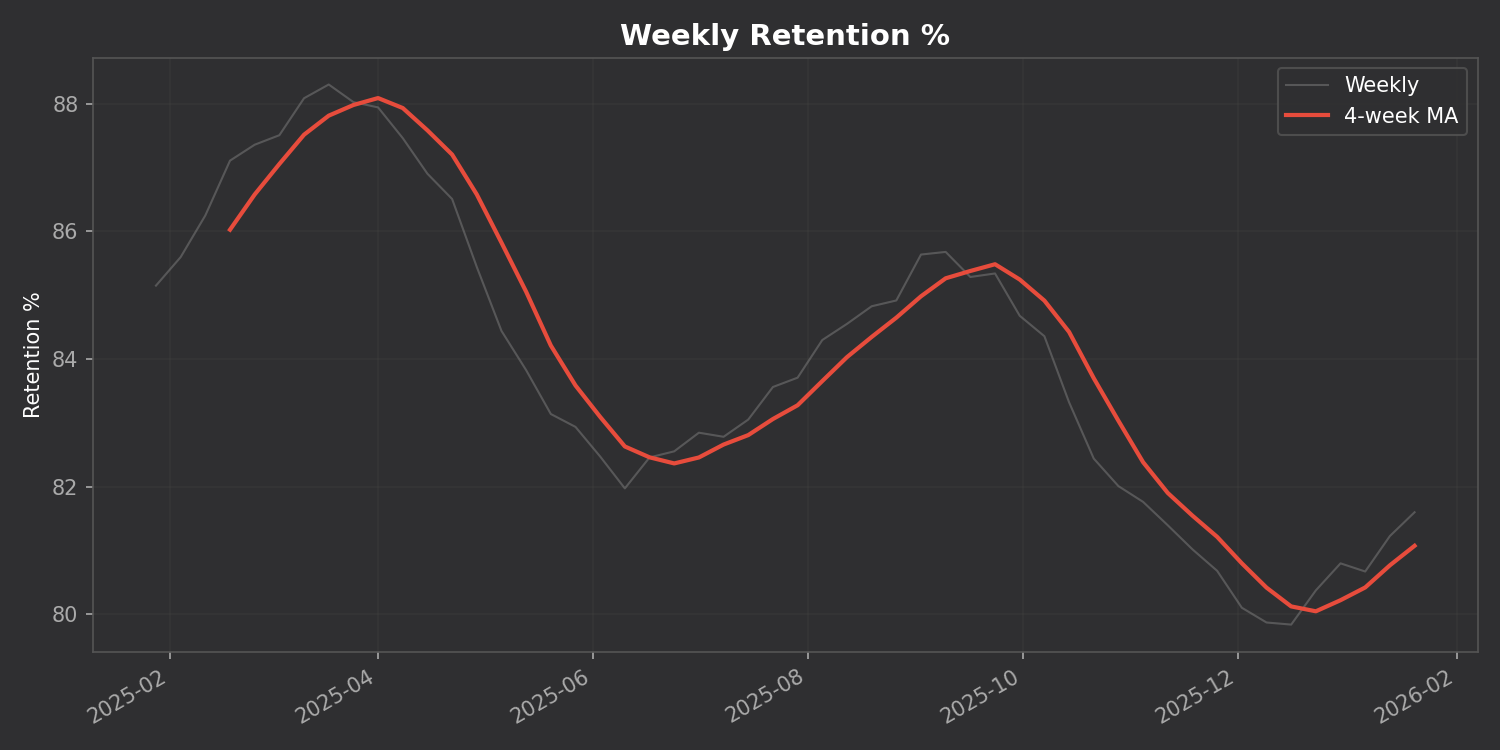

📊 Time Series Charts

Track your retention, reviews, and intervals over time with a 4-week moving average to smooth out the noise.
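A moving average replaces each point with the mean of the most recent few points, which smooths out week-to-week noise. The post doesn’t say whether ankiR Stats uses a trailing or centered window, or how it aggregates weeks. The sketch below assumes a trailing 4-point window over weekly totals (e.g. reviews per week), and `moving_average` is a hypothetical helper.

```python
from typing import Optional


def moving_average(values: list[float], window: int = 4) -> list[Optional[float]]:
    """Trailing moving average; None until the first full window is available."""
    out: list[Optional[float]] = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # drop the value that just left the window
        out.append(running / window if i >= window - 1 else None)
    return out


weekly_reviews = [420, 380, 510, 460, 300, 490]  # illustrative numbers only
print(moving_average(weekly_reviews))  # [None, None, None, 442.5, 412.5, 440.0]
```

Keeping a running sum makes each step constant-time instead of re-summing the whole window.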

🗓️ GitHub-style Heatmap

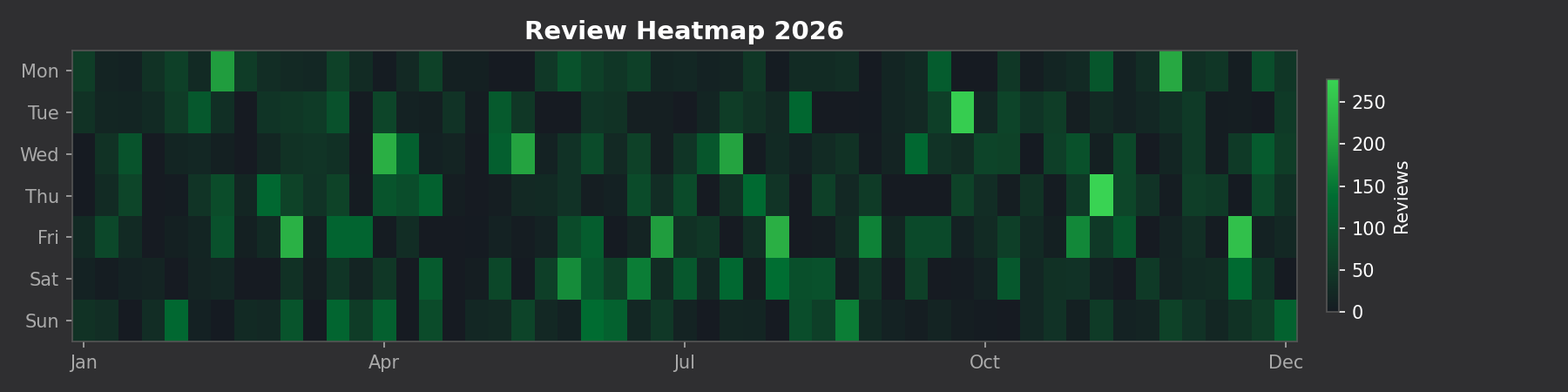

See your entire year of reviews at a glance.
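A GitHub-style heatmap is essentially a grid with one column per week and one row per weekday, shaded by how many reviews happened that day. The post doesn’t describe the addon’s layout code, so this is just a sketch of the usual grid mapping with a hypothetical `heatmap_cells` helper; choosing a color for each count is left out.

```python
from datetime import date, timedelta


def heatmap_cells(counts: dict[date, int], year: int) -> list[tuple[int, int, int]]:
    """One (column, row, reviews) cell per day of `year`.

    Columns are weeks and rows are weekdays with Sunday on top (row 0),
    like GitHub's contribution graph. Days without reviews get a count of 0.
    """
    start = date(year, 1, 1)
    offset = (start.weekday() + 1) % 7  # date.weekday() is Monday=0; shift to Sunday=0
    cells = []
    day = start
    while day.year == year:
        index = (day - start).days + offset
        cells.append((index // 7, index % 7, counts.get(day, 0)))
        day += timedelta(days=1)
    return cells
```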

🔬 Time Series Decomposition

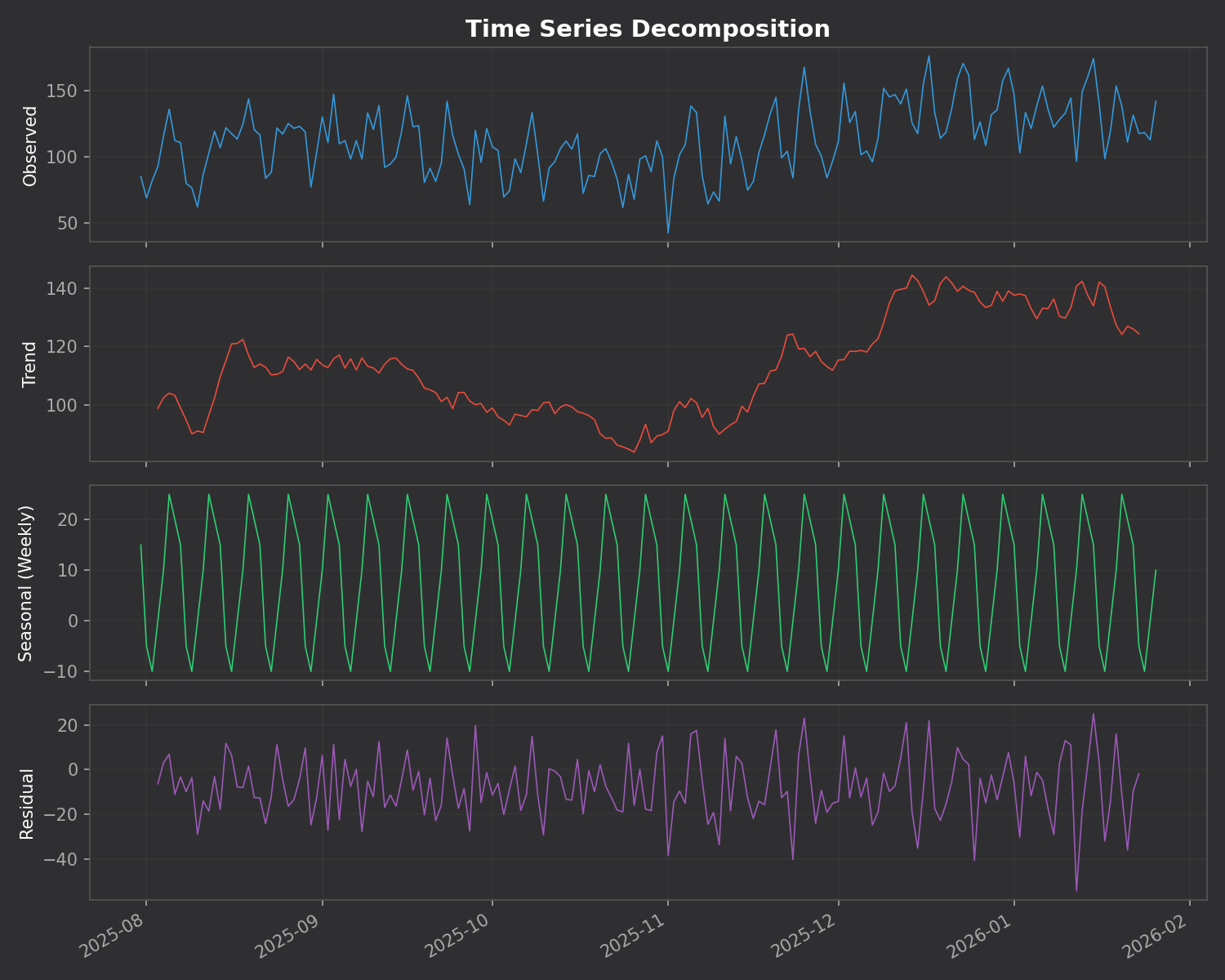

This is the killer feature. The addon breaks down your daily reviews into three components:

- Trend – Are you studying more or less over time?

- Seasonal – Your weekly pattern (which days you study most)

- Residual – Random variation that doesn’t fit the pattern
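The post doesn’t name the decomposition method the addon uses. As an illustration, here is a sketch of classical additive decomposition, one standard way to obtain these three components: the trend is a centered moving average over one week, the seasonal part is the average deviation from trend at each position in the weekly cycle, and the residual is whatever is left. The `decompose` function is hypothetical.

```python
from statistics import mean
from typing import Optional


def decompose(y: list[float], period: int = 7):
    """Classical additive decomposition: y[t] = trend[t] + seasonal[t] + residual[t].

    The trend is a centered moving average spanning one full period (odd periods
    only), so the first and last period // 2 points get None for trend and residual.
    """
    if period % 2 == 0:
        raise ValueError("this sketch only handles odd periods such as 7")
    n, half = len(y), period // 2
    trend: list[Optional[float]] = [None] * n
    for t in range(half, n - half):
        trend[t] = mean(y[t - half:t + half + 1])

    # Average the detrended values at each position in the cycle (e.g. each weekday)
    buckets: list[list[float]] = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(y[t] - trend[t])
    raw = [mean(b) if b else 0.0 for b in buckets]
    shift = mean(raw)  # center the weekly pattern so it sums to zero over a week
    seasonal = [raw[t % period] - shift for t in range(n)]

    residual = [None if trend[t] is None else y[t] - trend[t] - seasonal[t] for t in range(n)]
    return trend, seasonal, residual
```

For daily review counts, `period=7` captures the weekly rhythm: each seasonal value tells you how far above or below trend that day of the week typically runs.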

⚠️ Anomaly Detection

The addon automatically finds unusual study days using z-score analysis. Days where you studied way more (or less) than normal are flagged with their statistical significance.
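A z-score measures how many standard deviations a day sits from the average day: z = (x − mean) / standard deviation. The sketch below flags days whose |z| reaches a threshold and attaches a two-sided p-value as a rough significance measure. The threshold of 2.0, the use of the population standard deviation, and the normal approximation behind the p-value are all assumptions, not details from the addon, and `find_anomalies` is a hypothetical helper.

```python
from statistics import NormalDist, mean, pstdev


def find_anomalies(daily: dict[str, float], threshold: float = 2.0) -> dict[str, tuple[float, float]]:
    """Flag days whose |z-score| >= threshold, mapped to (z, two-sided p-value).

    Uses the population standard deviation of all days. The p-value assumes
    daily counts are roughly normally distributed, which is only approximate.
    """
    values = list(daily.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return {}  # every day identical: nothing stands out
    flagged = {}
    for day, x in daily.items():
        z = (x - mu) / sigma
        if abs(z) >= threshold:
            p = 2 * (1 - NormalDist().cdf(abs(z)))
            flagged[day] = (z, p)
    return flagged
```

Because the unusual days are included when computing the mean and standard deviation, a single extreme day pulls both toward itself; more robust variants use the median and median absolute deviation instead.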

No Dependencies

Unlike many addons that require you to install Python packages, ankiR Stats uses web-based charts (Chart.js). It works out of the box on Windows, Mac, and Linux.

Installation

- Open Anki

- Go to Tools → Add-ons → Get Add-ons

- Enter code: 419954163

- Restart Anki

- Access via Tools → ankiR Stats

Based on ankiR

This addon is a Python port of key features from ankiR, an R package I developed for comprehensive Anki analytics. The R package has 91 functions including forecasting, autocorrelation analysis, and FSRS integration – if you want even deeper analysis, check it out.

Open Source

The addon is open source and available on GitHub. Issues and contributions welcome!

Let me know what you think in the comments!